
After discussing how to avoid entity ID conflicts in the previous article, we are finally ready to start migrating content. The first entity we will focus on is files, covering both public and private file migrations. We will share tips and hacks related to performance optimizations and discuss how to handle files hosted outside of Drupal.

Prerequisites

For this section, we are going to switch to the configuration branch of the repository. This branch contains the configuration migrated in the previous section, exported to the drupal10/config directory, and has the tag1_migration_config module disabled. The code for the tag1_migration_config module is still there and can be reviewed for reference, but the configuration migrations will not be available in the Drupal installation. This requires some customizations to the content migrations, which will be explained as needed.

Remember that in addition to using migrations, we also added configuration by applying recipes and making manual changes in the administration interface. The migrations alone cannot reproduce the current state of the site's configuration.

Instead of starting from scratch, we will use the migrations generated by the Migrate Upgrade module, as explained in article 10. The migration files were copied into the drupal10/ref_migrations folder of the repository. More content migrations were generated than we will use. As with the configuration migrations, having them available does not force us to execute them all. We will cherry-pick and customize the generated migrations that let us import content in accordance with our migration plan.

If you have not done so, clone the repository and switch to the configuration branch. You can always refer to the main branch for the final version of the examples.

```shell
git clone https://github.com/tag1consulting/d7_to_d10_migration.git d7_to_d10_migration
cd d7_to_d10_migration
git checkout configuration
```

Moving forward, we will be executing migrations to move content from Drupal 7 into Drupal 10, so we need both environments running. Refer to article 8 for an explanation of how to set up the Drupal 7 site, and to article 9 for an explanation of how to set up the Drupal 10 site. For a quick reference, use the commands below to get both sites up and running.

For Drupal 7:

```shell
cd drupal7
ddev start
ddev launch
ddev drush uli
```

For Drupal 10:

```shell
cd drupal10
ddev start
ddev composer install
ddev composer si # Install the site from existing configuration
ddev launch
ddev drush uli
```

Entity ID and high water mark considerations

For each content migration, we will have a short section dedicated to considerations about entity ID conflicts and setting a high water mark value. The former we covered in great detail in the previous article. The latter is an optimization for performing incremental migrations.

To avoid entity ID conflicts, we are going to artificially inflate the AUTO_INCREMENT value of entity tables so that the IDs of entities imported from Drupal 7 do not collide with the IDs of entities newly created in Drupal 10.

The base table for the file entity in Drupal 10 is file_managed. There is no revision table for this entity. When deciding the new AUTO_INCREMENT value for the table in Drupal 10, consider the highest file ID (fid) in the source site’s file_managed table. Execute the following query against the Drupal 7 project:

```sql
-- Get the highest file id value.
SELECT fid FROM file_managed ORDER BY fid DESC LIMIT 1;
```

For brevity, we are going to use the AUTO_INCREMENT Alter module to apply the new value. Refer to the previous article for more information on how this module works or how to perform the operation in custom code.

```php
$settings['auto_increment_alter_content_entities'] = [
  'file' => [350], // Alter the tables for the file content entity.
];
```

Now execute the command provided by the AUTO_INCREMENT Alter module to trigger the alter operation in the Drupal 10 project.

```shell
ddev drush auto-increment-alter:content-entities
```

As for the high water property, some context helps explain why it is useful. When executing content migrations, the source plugin builds a SQL query to determine which records to fetch and process. When the high_water_property property is set, the migrate API keeps track of the last record that was processed. This is used to limit the total number of records that need to be processed in subsequent runs.

When performing incremental migrations, instead of fetching all records, a condition is added to the query so that only records above the last saved high water mark value are retrieved. Records with lower values are assumed to have been processed already.

In our small example project, the performance gains are negligible. In real migration projects, this could save hours, or quite literally days, when a fully migrated Drupal 10 database is needed.

While premature optimization might not be necessary, it is good to be familiar with performance optimizations baked into the systems we use. Performance, like security, should not be an afterthought.

We need to identify one field returned by the source plugin whose value always increases. Usually, the high_water_property property is set to a timestamp or a serial identifier. In the case of files, we can use the file ID (fid). Because it is the entity’s identifier, its value keeps growing as new files are added to the system. If an initial migration imports all files up to fid 42, it is safe for an incremental run to only fetch records whose fid is greater than 42.

For files, we will include the following snippet in the source plugin configuration:

```yaml
source:
  plugin: d7_file
  high_water_property:
    name: fid
    alias: f
```
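To make the idea concrete, here is a simplified bash model of how a high water mark on fid narrows each run. It is not the migrate API's code. The fid values are made up, the input is assumed to arrive sorted by fid (as it would if the query were ordered by the high water field), and the saved mark lives in a shell variable instead of being persisted by Drupal between runs.

```bash
#!/usr/bin/env bash
# Simplified model of incremental runs using fid as the high water property.
# Assumption: fids are passed in ascending order. Not the migrate API's code.

HIGH_WATER=""   # No saved mark yet, so the first run fetches every record.
FETCHED=()      # fids fetched by the most recent run.

run_incremental() {
  FETCHED=()
  local fid
  for fid in "$@"; do
    # Mirrors the "fid > saved mark" condition added to the source query.
    if [[ -z "$HIGH_WATER" ]] || (( fid > HIGH_WATER )); then
      FETCHED+=("$fid")
    fi
  done
  # Remember the value from the last processed record for the next run.
  if (( ${#FETCHED[@]} > 0 )); then
    HIGH_WATER=${FETCHED[${#FETCHED[@]}-1]}
  fi
}

# First run: files 1 to 42 exist in Drupal 7.
run_incremental $(seq 1 42)
echo "first run: ${#FETCHED[@]} records, mark=$HIGH_WATER"

# Files 43 to 45 are uploaded later. The next run only fetches those.
run_incremental $(seq 1 45)
echo "second run: ${FETCHED[*]}, mark=$HIGH_WATER"
```

The first run prints `first run: 42 records, mark=42`, and the second prints `second run: 43 44 45, mark=45`. The source grew to 45 records, but the second run only touched three of them.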

Technical note: When entities are processed in batches, it is possible for them to be created or updated so fast that timestamp values end up being the same. If available, favor entity revisions over timestamps when selecting the high water property for the content migration. Table primary keys are also good candidates for high water properties.
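The bash sketch below uses made-up rows to show why non-unique values are risky with a strict "greater than" comparison. Suppose a record is saved in the same second as the last record a run processed, but only after that run queried the source. Its timestamp equals the saved mark rather than exceeding it, so the next run never fetches it. This is a simplified model. It ignores the ID map and other source plugin options that can also influence which rows are fetched.

```bash
#!/usr/bin/env bash
# Hypothetical source rows as "id:timestamp". Rows 2 and 3 were saved in the
# same second, but row 3 did not exist yet when run 1 queried the source.
imported_in_run1=("1:1700000000" "2:1700000005")
rows_at_run2=("1:1700000000" "2:1700000005" "3:1700000005" "4:1700000009")

# Run 1 saved the timestamp of the last row it processed as the mark.
last=${imported_in_run1[${#imported_in_run1[@]}-1]}
mark=${last#*:}

# Run 2 only fetches rows whose timestamp is strictly above the mark.
fetched=()
for row in "${rows_at_run2[@]}"; do
  ts=${row#*:}
  if (( ts > mark )); then
    fetched+=("${row%%:*}")
  fi
done
echo "run 2 fetches rows: ${fetched[*]}"   # row 3 is silently skipped
```

Run 2 fetches only row 4. File IDs are unique and only increase, so using fid avoids this particular gap.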

Please note that the high water property is one of several performance optimizations that can be implemented for content migrations. Review this article for more configuration options offered by different source plugins. Some, like track_changes and batch_size, can also have an impact on performance. We are discussing the high_water_property configuration because it is one of the most impactful for large, incremental migrations.

If you decide to use another approach, be mindful that different configuration options might not play well together. Also, it is totally valid to implement your own performance optimizations via custom source plugins or migration runners. The core API aims to be a general purpose tool and cannot cover every edge case. You and your team have the domain knowledge of the project at hand and can implement tailor-made solutions.

Migrating public files

We use upgrade_d7_file to migrate public files. Copy it from the reference folder into our tag1_migration custom module and rebuild caches so that the migration is detected. If you just reinstalled the site from the configuration branch, the module should already be enabled. If you have been following along and running each migration as we went, you need to uninstall the tag1_migration_config module and enable the tag1_migration module.

```shell
cd drupal10
cp ref_migrations/migrate_plus.migration.upgrade_d7_file.yml web/modules/custom/tag1_migration/migrations/upgrade_d7_file.yml
ddev drush cache:rebuild

# Run if the site was not installed using the configuration branch.
ddev drush pm:uninstall tag1_migration_config
ddev drush pm:enable tag1_migration
```

Note that while copying the file, we also changed its name and placed it in a migrations folder inside our tag1_migration custom module. After copying the file, make the following changes:

- Remove the following keys: uuid, langcode, status, dependencies, field_plugin_method, cck_plugin_method, and migration_group.

- Add two migration tags: file and tag1_content.

- Add key: migrate under the source section.

- Add the high_water_property property as demonstrated above.

After the modifications, the upgrade_d7_file.yml file should look like this:

```yaml
id: upgrade_d7_file
class: Drupal\migrate\Plugin\Migration
migration_tags:
  - 'Drupal 7'
  - Content
  - file
  - tag1_content
label: 'Public files'
source:
  key: migrate
  plugin: d7_file
  scheme: public
  high_water_property:
    name: fid
    alias: f
  constants:
    source_base_path: 'http://ddev-migration-drupal7-web/'
process:
  fid:
    -
      plugin: get
      source: fid
  filename:
    -
      plugin: get
      source: filename
  source_full_path:
    -
      plugin: concat
      delimiter: /
      source:
        - constants/source_base_path
        - filepath
    -
      plugin: urlencode
  uri:
    -
      plugin: file_copy
      source:
        - '@source_full_path'
        - uri
  filemime:
    -
      plugin: get
      source: filemime
  status:
    -
      plugin: get
      source: status
  created:
    -
      plugin: get
      source: timestamp
  changed:
    -
      plugin: get
      source: timestamp
  uid:
    -
      plugin: get
      source: uid
destination:
  plugin: 'entity:file'
migration_dependencies:
  required: { }
  optional: { }
```

Now, rebuild caches for our changes to be detected and execute the migration. Run migrate:status to make sure we can connect to Drupal 7. Then, run migrate:import to perform the import operations.

```shell
ddev drush cache:rebuild
ddev drush migrate:status upgrade_d7_file
ddev drush migrate:import upgrade_d7_file
```

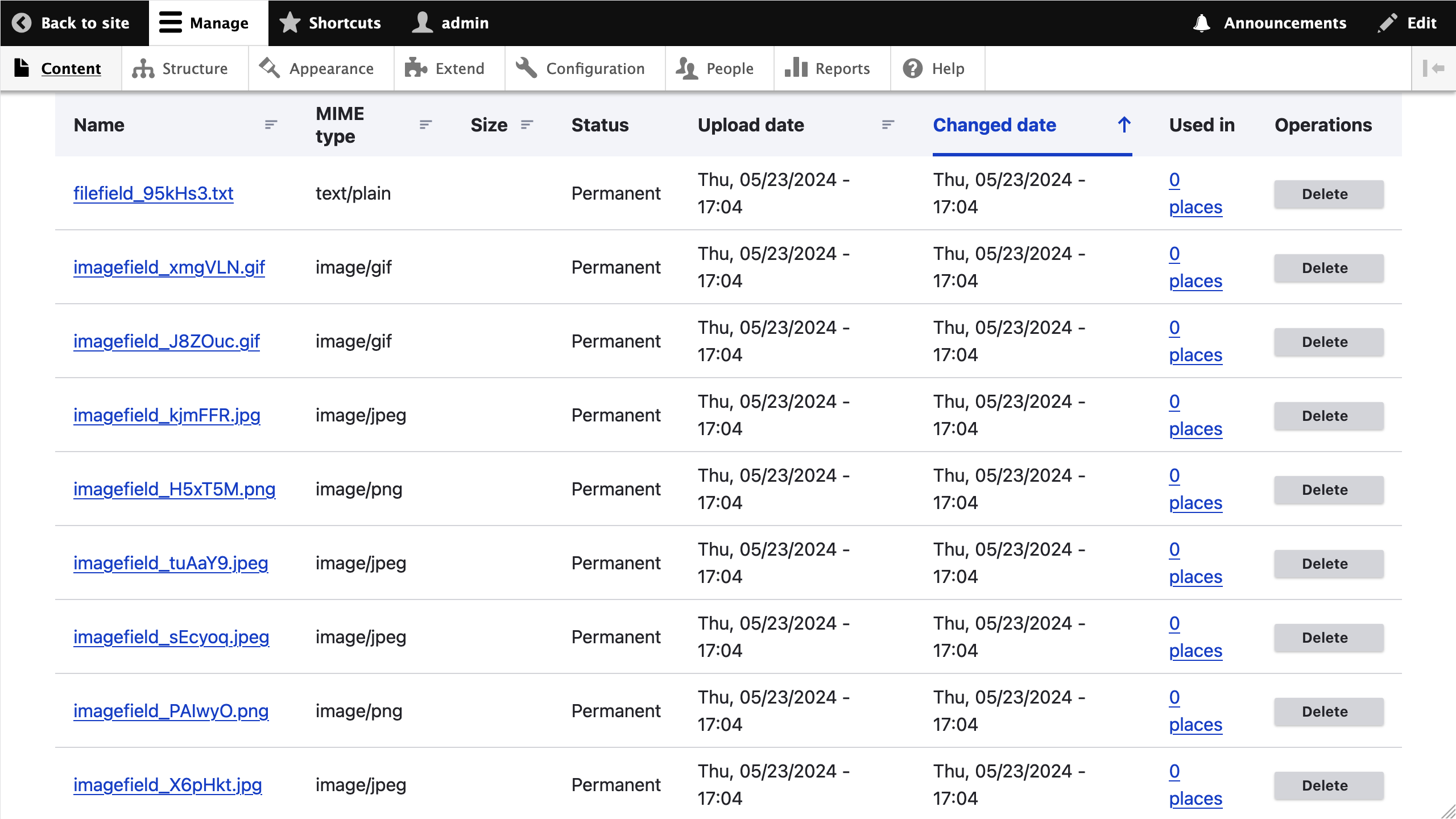

If things are properly configured, you should not get any errors. Go to https://migration-drupal10.ddev.site/admin/content/files and look at the list of migrated files.

Understanding how Drupal file migrations work

Are we done already? Yes, we’ve completed migrating public files. Congratulations! But you’re here to gain a deeper understanding of the migrate API, so our next focus will be on where the files are coming from.

Take a closer look at the source configuration of the upgrade_d7_file migration:

```yaml
source:
  key: migrate
  plugin: d7_file
  scheme: public
  constants:
    source_base_path: 'http://ddev-migration-drupal7-web/'
```

For more context, consider the result of running the following query against the Drupal 7 database:

```sql
SELECT * FROM file_managed WHERE uri LIKE "public:/%" LIMIT 1\G
```

```
*************************** 1. row ***************************
      fid: 1
      uid: 1
 filename: druplicon.jpg
      uri: public://article/druplicon.jpg
 filemime: image/jpeg
 filesize: 2890
   status: 1
timestamp: 280299600
```

The scheme configuration option can be set to a string or an array to filter which files are retrieved from the Drupal 7 site. Common values for this setting are public and private, which fetch public and private files respectively. If scheme is omitted when using the d7_file source plugin, files in all supported schemes are retrieved except those in the temporary scheme. The upgrade_d7_file migration is configured to retrieve public files only. In the example above, the file with ID (fid) 1 belongs to the public scheme, as its uri value shows: public://article/druplicon.jpg.
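The following rough bash approximation shows which URIs the scheme filter keeps. It uses the URIs from this article's examples plus a made-up temporary file. In the real plugin, the filter is a LIKE condition in the SQL query against file_managed. Here it is a loop over strings, and filter_by_scheme is a hypothetical name.

```bash
#!/usr/bin/env bash
# Rough approximation of the d7_file "scheme" filter (not core code).
# $1: schemes to keep, space separated; an empty string means "all".
# Remaining arguments: URIs as stored in Drupal 7's file_managed table.
filter_by_scheme() {
  local schemes=$1 uri scheme
  shift
  for uri in "$@"; do
    # This sketch skips temporary files unconditionally.
    if [[ $uri == temporary://* ]]; then
      continue
    fi
    # No scheme configured: keep everything else.
    if [[ -z $schemes ]]; then
      echo "$uri"
      continue
    fi
    for scheme in $schemes; do
      if [[ $uri == "$scheme"://* ]]; then
        echo "$uri"
        break
      fi
    done
  done
}

uris=(public://article/druplicon.jpg private://secret.txt temporary://upload.tmp)
filter_by_scheme public "${uris[@]}"   # what upgrade_d7_file selects
filter_by_scheme "" "${uris[@]}"       # scheme omitted
```

With scheme: public, only the public URI is kept. With scheme omitted, both the public and private URIs are kept and the temporary one is dropped.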

The d7_file source plugin expects a value for the constants/source_base_path configuration option. For public files, this can be set to a site address or to a local file directory relative to which the URIs in the files table are specified. Example values are https://www.tag1consulting.com and /var/www/html/drupal7, respectively. The latter is much faster than the former, especially when the files would otherwise be downloaded over the public internet.

But what does it mean for the URIs in the files table to be relative to a base path? For public files, the d7_file source plugin performs the following operations to determine where to retrieve each file from:

- Determine the public file directory in the source site. It reads the file_public_path Drupal 7 variable or defaults to sites/default/files.

- Read the value of the uri column and replace public:/ with the location of the public file directory identified in the previous step.

- Convert the result of the previous step to a relative path by removing the first occurrence of constants/source_base_path, if present. The new value is assigned to the filepath source property.

- Back in our upgrade_d7_file migration, we concatenate constants/source_base_path and filepath to obtain the full path of the file to import. The result is stored in the source_full_path pseudofield.

- The source_full_path pseudofield is passed to the file_copy process plugin, which takes care of getting the file into Drupal 10. The plugin includes logic for performing a download or copy operation depending on whether the received path represents a remote resource or not.

By applying the above logic, each public file's URI retrieved from the database is transformed as follows:

- public://article/druplicon.jpg gets converted to sites/default/files/article/druplicon.jpg inside the d7_file source plugin.

- sites/default/files/article/druplicon.jpg gets converted to http://ddev-migration-drupal7-web//sites/default/files/article/druplicon.jpg as part of the process pipeline of the upgrade_d7_file migration.

- The file at http://ddev-migration-drupal7-web//sites/default/files/article/druplicon.jpg is copied into public://article/druplicon.jpg, which translates to /var/www/html/web/sites/default/files/article/druplicon.jpg.
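The bash sketch below approximates the string transformations listed above for public files, using the values from this article's example project. It only computes paths. It does not query the database, URL-encode anything, or download files, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Approximation of how upgrade_d7_file locates a public file (not core code).
# Values match this article's example project; function names are hypothetical.
file_public_path="sites/default/files"                 # Drupal 7 variable or its default
source_base_path="http://ddev-migration-drupal7-web/"  # source/constants/source_base_path

# Source plugin: build the filepath source property from the uri column.
compute_filepath() {
  local path="${file_public_path}${1#public:/}"  # replace public:/ with the directory
  echo "${path/"$source_base_path"/}"            # strip source_base_path if present
}

# Process pipeline: concat constants/source_base_path and filepath with "/".
compute_source_full_path() {
  echo "${source_base_path}/$(compute_filepath "$1")"
}

compute_filepath "public://article/druplicon.jpg"
compute_source_full_path "public://article/druplicon.jpg"
```

The second call prints http://ddev-migration-drupal7-web//sites/default/files/article/druplicon.jpg. The double slash comes from the trailing slash in source_base_path followed by the / delimiter of the concat plugin.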

The location in Drupal 10 would be different if you configured the file_public_path setting in the web/sites/default/settings.php file inside the drupal10 folder. Otherwise, this setting defaults to sites/default/files.

Technical note: The d7_file source plugin is provided by the \Drupal\file\Plugin\migrate\source\d7\File class. The upgrade_d7_file migration is derived from core/modules/file/migrations/d7_file.yml. We highly recommend looking at the source code for both to get a better idea of what is going on under the hood. Even if you do not understand all the technical details, the files include helpful comments about how things work and how they can be configured.

One more thing to point out is where http://ddev-migration-drupal7-web/ came from. That is the result of an action we took back in article 10 when we executed the following Drush command to generate the migrations with the Migrate Upgrade module:

```shell
ddev drush --yes migrate:upgrade --legacy-db-key='migrate' --legacy-root='http://ddev-migration-drupal7-web'
```

During the generation of migration configuration entities, the value of the --legacy-root command line option is injected into the source/constants/source_base_path configuration of migrations that create file entities like upgrade_d7_file and upgrade_d7_file_private. See \Drupal\migrate_upgrade\MigrateUpgradeDrushRunner::applyFilePath for details.

Migrating private files

Migrating private files follows a similar approach. The primary difference is that private files, in theory, are not web accessible. As such, the file_copy process plugin will not be able to retrieve the files if constants/source_base_path is set to a public domain. Instead, the Drupal 7 files need to be copied to the same server where Drupal 10 is running. Then, the private files migration is configured to read them from a local path like /var/www/html/d7_private_files.

When copying the files, it is important that the private files are placed outside Drupal 10's webroot, or that access to that folder is otherwise blocked at the web server level. Before discussing how to migrate private files, note that you can also migrate public files from a local directory on Drupal 10’s server. A local file copy operation is much faster than downloading a remote file, especially when dealing with thousands of files and hundreds of GBs of data.

Our example repository does not make use of private files so setting up private files will be left as an exercise to the curious reader. See https://www.drupal.org/docs/7/core/modules/file/overview for instructions on how to do it in Drupal 7. We are going to proceed under the assumption that the private file system path is set to /var/www/html/private_files.

The first step to migrate private files would be to copy the folder containing them. A common way to do it is using rsync to move files from one server to another or from a server to a local environment. Some hosting providers will provide a compressed *.zip or *.tar.gz file with the content of user uploaded files. One way or another, we need to get the Drupal 7 private files into a location where Drupal 10 can access them.

In our example project, Drupal 7's /var/www/html/private_files path inside DDEV's web container maps to drupal7/private_files on your host machine. Drupal 10's /var/www/html/d7_private_files path inside DDEV's web container maps to drupal10/d7_private_files on your host machine.

The following commands can be used to simulate having Drupal 7 private files and move them to a local folder in Drupal 10's server. We are creating a file that will eventually be migrated.

```shell
# Location of private files in Drupal 7.
mkdir -p drupal7/private_files

# Location of Drupal 7 private files that were moved into Drupal 10's web server.
mkdir drupal10/d7_private_files

# Location of private files in Drupal 10.
mkdir drupal10/new_private_files_location

# Create a test private file.
echo "Do not tell anyone." >> drupal7/private_files/secret.txt

# Copy private files from Drupal 7 into Drupal 10's web server.
cp -r drupal7/private_files/. drupal10/d7_private_files

# Verify that the files were copied into the location where the private files migration expects it.
cat drupal10/d7_private_files/secret.txt
```

Assuming we have records for private files in the database, consider the result of the following query:

```sql
SELECT * FROM file_managed WHERE uri LIKE "private:/%" LIMIT 1\G
```

```
*************************** 1. row ***************************
      fid: 54
      uid: 1
 filename: secret.txt
      uri: private://secret.txt
 filemime: text/plain
 filesize: 4
   status: 1
timestamp: 979534800
```

Now we need to configure private files in Drupal 10. Refer to the online documentation for more information on configuring and securing access to private files. In short, we will add the following to the web/sites/default/settings.php file inside the drupal10 folder.

```php
$settings['file_private_path'] = $app_root . '/../new_private_files_location';
```

Now that we have private files configured in Drupal 7 and 10 plus records in the file_managed table in the source site, we can proceed with the migration. We use upgrade_d7_file_private to migrate private files. Copy it from the reference folder into our tag1_migration custom module and rebuild caches for the migration to be detected.

```shell
cd drupal10
cp ref_migrations/migrate_plus.migration.upgrade_d7_file_private.yml web/modules/custom/tag1_migration/migrations/upgrade_d7_file_private.yml
ddev drush cache:rebuild
```

Note that while copying the file, we also changed its name and placed it in a migrations folder inside our tag1_migration custom module. After copying the file, make the following changes:

- Remove the following keys: uuid, langcode, status, dependencies, field_plugin_method, cck_plugin_method, and migration_group.

- Add two migration tags: file and tag1_content.

- Add key: migrate under the source section.

- Add the high_water_property property as demonstrated above.

- Change the value of source/constants/source_base_path to /var/www/html/private_files.

- Add a new source constant named new_base_path and set its value to /var/www/html/d7_private_files.

- Update the first element of the process/source_full_path/0/source configuration to constants/new_base_path.

The last three steps are very important. source_base_path defines where the private files were located on Drupal 7's server. The d7_file source plugin strips this prefix to turn each file's location into a relative path. new_base_path defines where the private files were copied to on Drupal 10's server. After the modifications, the upgrade_d7_file_private.yml file should look like this:

```yaml
id: upgrade_d7_file_private
class: Drupal\migrate\Plugin\Migration
migration_tags:
  - 'Drupal 7'
  - Content
  - file
  - tag1_content
label: 'Private files'
source:
  key: migrate
  plugin: d7_file
  scheme: private
  high_water_property:
    name: fid
    alias: f
  constants:
    source_base_path: '/var/www/html/private_files'
    new_base_path: '/var/www/html/d7_private_files'
process:
  fid:
    -
      plugin: get
      source: fid
  filename:
    -
      plugin: get
      source: filename
  source_full_path:
    -
      plugin: concat
      delimiter: /
      source:
        - constants/new_base_path
        - filepath
  uri:
    -
      plugin: file_copy
      source:
        - '@source_full_path'
        - uri
  filemime:
    -
      plugin: get
      source: filemime
  status:
    -
      plugin: get
      source: status
  created:
    -
      plugin: get
      source: timestamp
  changed:
    -
      plugin: get
      source: timestamp
  uid:
    -
      plugin: get
      source: uid
destination:
  plugin: 'entity:file'
migration_dependencies:
  required: { }
  optional: { }
```

Now, rebuild caches for our changes to be detected and execute the migration. Run migrate:status to make sure we can connect to Drupal 7. Then, run migrate:import to perform the import operations.

```shell
ddev drush cache:rebuild
ddev drush migrate:status upgrade_d7_file_private
ddev drush migrate:import upgrade_d7_file_private
```

If things are properly configured, you should not get any errors. Go to https://migration-drupal10.ddev.site/admin/content/files and look at newly migrated private files.

For private files, the d7_file source plugin performs the following operations to determine where to retrieve each file from:

- Determine the private file directory in the source site. It reads the file_private_path Drupal 7 variable. No default is used if the variable is not set.

- Read the value of the uri column and replace private:/ with the location of the private file directory identified in the previous step.

- Convert the result of the previous step to a relative path by removing the first occurrence of constants/source_base_path. The new value is assigned to the filepath source property.

- Back in our upgrade_d7_file_private migration, we concatenate constants/new_base_path and filepath to obtain the full path of the file to import on Drupal 10's web server. The result is stored in the source_full_path pseudofield.

- The source_full_path pseudofield is passed to the file_copy process plugin, which takes care of getting the file into Drupal 10. In this case, the plugin recognizes that the location is not a remote resource and copies the file locally.

A file copy operation will place the file into Drupal 10's private file folder: /var/www/html/web/../new_private_files_location. This is the same as:

- /var/www/html/new_private_files_location inside DDEV’s web container, and

- drupal10/new_private_files_location on your host machine.

By applying the above logic, each private file's URI retrieved from the database is transformed as follows:

- private://secret.txt gets converted to /var/www/html/private_files/secret.txt inside the d7_file source plugin.

- /var/www/html/private_files/secret.txt gets converted to /secret.txt inside the d7_file source plugin.

- /secret.txt gets converted to /var/www/html/d7_private_files//secret.txt as part of the process pipeline of the upgrade_d7_file_private migration.

- The file at /var/www/html/d7_private_files//secret.txt on Drupal 10's server is copied into private://secret.txt.

In Drupal 10, the private://secret.txt URI refers to the same file as:

- /var/www/html/new_private_files_location/secret.txt inside DDEV’s web container, and

- drupal10/new_private_files_location/secret.txt on your host machine.

Importing files from a local copy

The new_base_path source constant is only necessary if the location of the private files in Drupal 7 differs from the location where those files are copied on Drupal 10's web server. In our example, the Drupal 7 private files lived in /var/www/html/private_files and were copied into /var/www/html/d7_private_files in preparation for the migration.

You might be tempted to place the files directly where they will ultimately live in Drupal 10 and bypass the file_copy operation. In our example, that would be /var/www/html/new_private_files_location. However, it is better not to do this, because some modules might alter the uri property of the retrieved file before the file entity is saved. Use cases for this include changing the scheme of files or reorganizing their folder structure. In such cases, the real location on disk will not match the location stored in Drupal 10's file_managed table.

A similar approach of copying the files into Drupal 10's web server can be used to speed up public file migrations. Apply the following changes to the upgrade_d7_file migration:

- Change the value of source/constants/source_base_path to sites/default/files. This should match the value of Drupal 7's file_public_path variable. Use sites/default/files if the variable is not set.

- Add a new source constant named new_base_path and set its value to /var/www/html/d7_public_files, the directory on Drupal 10's server that contains a copy of Drupal 7's public file folder.

- Update the first element of the process/source_full_path/0/source configuration to constants/new_base_path.
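The same path arithmetic applies to any local copy. The bash sketch below uses a hypothetical function and the article's example values. It strips source_base_path from the Drupal 7 location and prepends new_base_path. This reproduces both the private file example from earlier in the article and the public variant described in the list above. It approximates the described behavior and is not the d7_file plugin's code.

```bash
#!/usr/bin/env bash
# Approximation of how the source path is built when files are read from a
# local copy on Drupal 10's server (hypothetical function, not core code).
# Args: uri, Drupal 7 directory for the uri's scheme, source_base_path,
#       new_base_path.
local_source_path() {
  local uri=$1 d7_dir=$2 source_base_path=$3 new_base_path=$4 path
  path="${d7_dir}/${uri#*://}"           # scheme:// -> Drupal 7 directory
  path="${path/"$source_base_path"/}"    # strip source_base_path (first match)
  echo "${new_base_path}/${path}"        # concat with the "/" delimiter
}

# Private files, as configured in upgrade_d7_file_private.
local_source_path "private://secret.txt" "/var/www/html/private_files" \
  "/var/www/html/private_files" "/var/www/html/d7_private_files"

# Public files read from a local copy of sites/default/files.
local_source_path "public://article/druplicon.jpg" "sites/default/files" \
  "sites/default/files" "/var/www/html/d7_public_files"
```

The calls print /var/www/html/d7_private_files//secret.txt and /var/www/html/d7_public_files//article/druplicon.jpg. For the public variant, this means the contents of Drupal 7's sites/default/files folder should sit directly under /var/www/html/d7_public_files.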

Before going any further, I want to acknowledge that, yes, keeping track of all the different locations involved in file migrations can be quite hard. While writing this article, I had to thoroughly review the code of the d7_file source plugin and the file_copy process plugin, inspect how the file migrations were generated, tweak those migrations, and step-debug through the whole process to piece together something that works.

One thing is for sure. You can come up with a different set of file locations and still migrate files properly. Nothing stops you from creating custom source or process plugins, or coming up with different process pipelines. If so, refer back to this article for inspiration.

Remember to review the migration system's issue queue if you encounter problems. Asking in the #migration channel of the Drupal Slack is a good way to get community support.

Performance considerations

When there is a large number of files amounting to hundreds of GBs of data, the file migrations can take a very long time to complete. The best way to speed up the process is copying the Drupal 7 files into the Drupal 10 server and using that as the source of the migration.

Another optimization is passing an additional configuration option to the file_copy plugin to prevent multiple downloads of the same file. Adding file_exists: 'use existing' will check if a file with the same name exists in the destination location. If so, the file_copy plugin bails out without downloading or copying the file again. Using previously migrated files should not be a problem because, at the moment, it is not possible to replace files once they are uploaded to Drupal. For reference on how to apply this configuration, see the following snippet:

```yaml
process:
  uri:
    -
      plugin: file_copy
      source:
        - '@source_full_path'
        - uri
      file_exists: 'use existing'
```
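The bash sketch below illustrates the behavior described for file_exists: 'use existing'. If a file already exists at the destination, the transfer is skipped. It stands in for the real plugin using temporary directories and a hypothetical helper. It is not the file_copy plugin, which also handles remote downloads and the other file_exists modes.

```bash
#!/usr/bin/env bash
# Illustration of the 'use existing' idea: skip the transfer when a file
# already exists at the destination. Hypothetical helper, not file_copy itself.
copy_use_existing() {
  local src=$1 dest=$2
  if [[ -e $dest ]]; then
    echo "skipped: $dest"
    return 0
  fi
  mkdir -p "$(dirname "$dest")"
  cp "$src" "$dest"
  echo "copied: $dest"
}

# Temporary directories stand in for the local copy and the destination.
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/d7_private_files"
echo "Do not tell anyone." > "$work/d7_private_files/secret.txt"

copy_use_existing "$work/d7_private_files/secret.txt" "$work/private/secret.txt"  # copied
copy_use_existing "$work/d7_private_files/secret.txt" "$work/private/secret.txt"  # skipped
```

On a re-run of a large migration, every file that is already in place costs only an existence check instead of a download or copy.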

The two techniques explained above apply to both public and private file migrations. They are also safe to use when running a full migration to prepare a production-like Drupal 10 database. If you want some tips and hacks for local development, continue reading.

Note: While not related to the above, if you are using mutagen with DDEV on macOS, configure the upload_dirs setting.

Don't try these techniques at home

Congrats on making it this far into the article! As a reward, I will share some insights that might reduce the wait times for file migrations during local development. Just promise me two things:

- Never do this when running a migration in production. Never.

- If someone asks, don't tell them that you heard of these techniques from me. ;-)

Tip 1: Not migrating files

The first tip to speed up the migration of files is not migrating any file. Let me elaborate. It is possible to migrate a reference to a file without getting the file on disk. Instead of using the file_copy plugin, you make a verbatim assignment of the uri property from source to destination like this:

```yaml
process:
  uri: uri
```

Assuming a public file located at public://dinarcon.jpg in Drupal 7, the migration will store a reference in Drupal 10 with the same URI value in its file_managed table. Any user trying to retrieve the file will get a file not found error. But as far as the migration is concerned, the references to the files have been migrated and the file entities created. This is also enough to consider the migration completed. Other migrations that depend on files will not fail with the error: did not meet the requirements. Missing migrations upgrade_d7_file.

The above technique can be supplemented with the Stage File Proxy module when performing public file migrations. In our example, you can install, enable, and configure the module in the Drupal 10 project with the following commands:

```shell
ddev composer require drupal/stage_file_proxy
ddev drush pm:enable stage_file_proxy
ddev drush config:set stage_file_proxy.settings origin 'http://ddev-migration-drupal7-web'
```

When a request comes in for a file that is missing in Drupal 10, the module tries to retrieve it from the origin domain. This would not work for migrating private files because they are, in theory, not web accessible. I have seen conversations about “temporarily” removing access control from private files, but that is an action with very real security risks. Don't do that. Please do not shoot yourself in the foot. If you decide to do it anyway, put the source and destination sites behind a firewall when executing the private files migrations.

Tip 2: Hacking Drupal core

The next technique involves hacking Drupal core. What? I didn’t say it would be pretty. Joking aside, hacking Drupal core means modifying the files that make up the base framework. This is useful if you need to roll back file migrations. In many cases, rolling back a migration triggers the delete operation on the imported entities. Deleting a file entity removes all references to the file and removes the file from disk.

When a file migration is rolled back, the files need to be downloaded again the next time the migration is executed. That is not a big deal in our rather simple example. But with large file directories, deleting and re-downloading files can mean hours of waiting.

To prevent the files from being removed from disk upon rollback, edit the \Drupal\file\Entity\File::preDelete method at web/core/modules/file/src/Entity/File.php inside the drupal10 folder:

```php
public static function preDelete(EntityStorageInterface $storage, array $entities) {
  parent::preDelete($storage, $entities);

  foreach ($entities as $entity) {
    // ...

    // Delete the actual file. Failures due to invalid files and files that
    // were already deleted are logged to watchdog but ignored, the
    // corresponding file entity will be deleted.
    try {
      // Comment out or remove the line below.
      // \Drupal::service('file_system')->delete($entity->getFileUri());
    }
    catch (FileException $e) {
      // Ignore and continue.
    }
  }
}
```

This is a bad idea on so many levels. You are altering core behavior. Files will be kept on disk not only during migration rollbacks, but whenever Drupal invokes an entity delete operation on files. You could forget that you made this change and introduce bugs in other parts of the system. You could also lose your hack in the next update of Composer packages. You should definitely not try this. If you do, create a patch with the hack and apply it using cweagans/composer-patches. A composer update won’t override the patch, and the patch makes the change more visible: you’re less likely to forget the hack was applied when you see it listed in your composer.json file.

I guess I cannot take back the tips that I shared. If you decide to implement any of the techniques discussed in this section, exercise extra caution. Remember: never do any of them in a production environment. They are meant to be used exclusively during local development.

Migrating files hosted outside of Drupal

If you are using an external service to host your files, you need to account for more things when migrating them. Let's say you are using the S3 File System module to store files in an Amazon S3 bucket. Ask yourself the following questions:

- Will you share the same bucket between Drupal 7 and 10? If you do, and you do not need to change the path structure, you could migrate the uri property verbatim as discussed above. Warning: When using shared buckets, rolling back the migration in Drupal 10 will trigger the deletion of the file in the bucket. This will also affect Drupal 7! If you decide to use shared buckets, make sure to never roll back a migration in an environment that points to a production bucket. This is easier said than done. You could probably subscribe to the MigrateEvents::PRE_ROLLBACK event and throw an exception to prevent further processing. Or better yet, never use shared buckets.

- If you are not using shared buckets, how will you move the files from one bucket to another? Many providers offer tools to bulk copy files between buckets. Some providers even offer tools for migrating from other providers.

- Does Drupal 10 have write access to the new bucket? It might sound obvious, but verify access controls are properly set up. You could have copied an image file into the new bucket with an external tool. When there is a need to generate an image style, Drupal might not be able to create it if permissions are misconfigured.

- Do you need to enable CORS-based uploads? Not directly related to the migration operation, but it might be a requirement carried over from the Drupal 7 site. The S3 File System CORS Upload module can help with this.

Is there more to file migrations?

We could certainly keep going, but this article is long enough already. Instead, I invite you to listen to this podcast that discusses complex file and media migrations: https://www.tag1consulting.com/blog/moving-drupal-7-drupal-10-managing-complex-file-and-media-migrations

Before wrapping up, let me highlight one more thing. Take another look at the final upgrade_d7_file.yml file. The changes we made to that migration do not change the import logic in a meaningful way. We could have imported the public files without making any modifications to the generated migration. Drupal can be adapted to fulfill very niche requirements, but it can also do a lot with little or no customization.

We covered a lot of ground today, but don’t feel that you need to understand every detail of how the migrate API works. Yes, it is a fantastic, flexible, and powerful tool. It is also a complex one. In my case, I learn something new every time I interact with it.

Whew! We’re done with files for now. In the next article, we will move on to migrating users and taxonomy terms.